Microsoft recommends placing the Landing layer of your Data Lake in a separate storage account, and for good reason: you don’t need to expose your Data Lake to every third party just because you want them to send you their data. You can configure the Landing storage account with its own folder and permission structure, then move the landed datasets into your Data Lake when they are ready. In Fabric’s case, you can use an ADLS Gen2 account as your Landing layer and then move the data into OneLake.

Depending on how frequently you would like to move the data, you have two options:

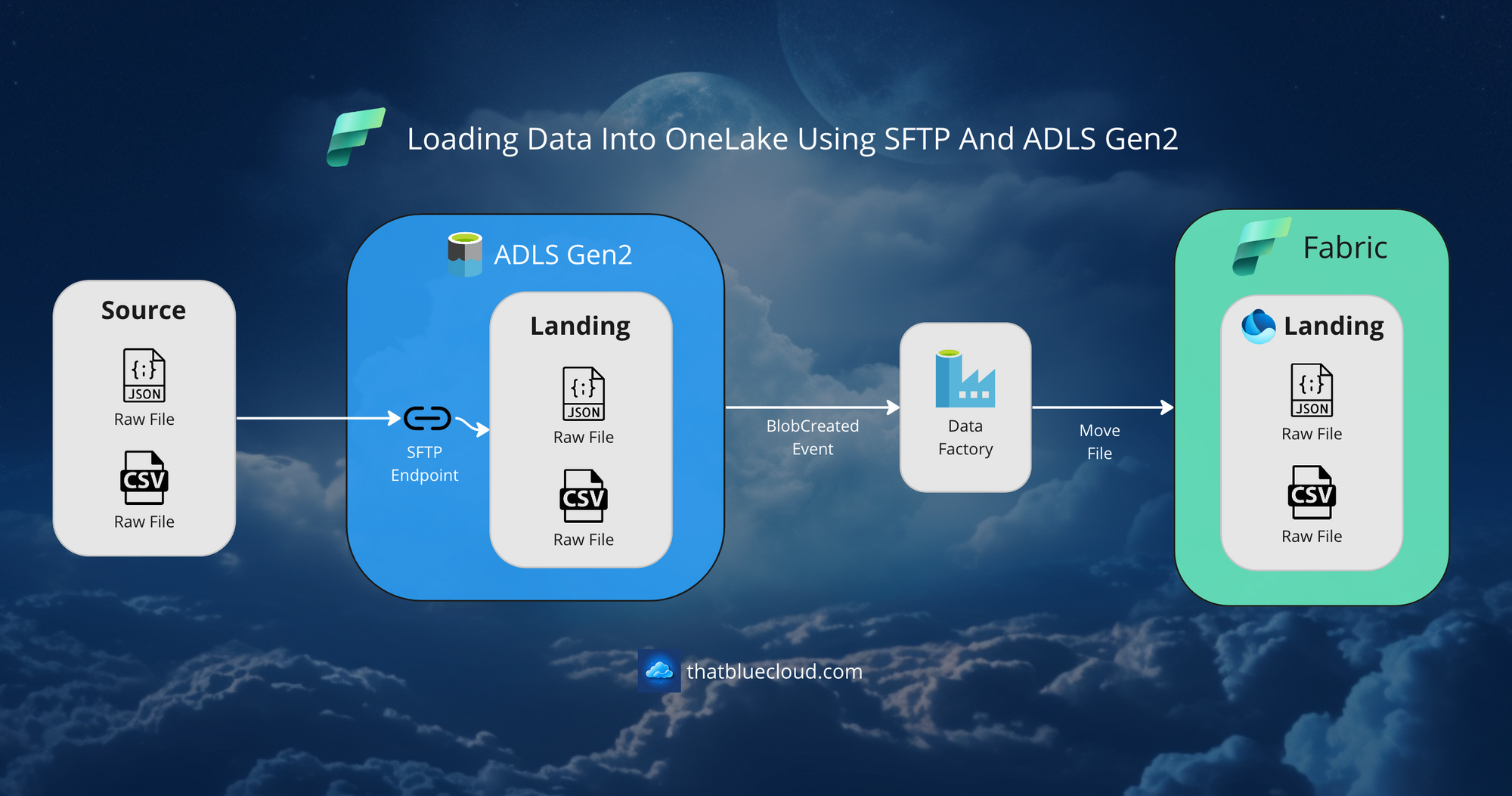

1. Immediate Transfer Using Blob Events and ADF

If you need the data transferred to OneLake immediately, you can use the blob events raised by the storage account to trigger ADF pipelines that copy your files to the OneLake target. The transfer is nearly instantaneous.
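
ADF’s storage event trigger hands the pipeline the location of the new blob (through `@triggerBody().folderPath` and `@triggerBody().fileName`), which the Copy activity can then use as its source path. To make that concrete, the plain-Python sketch below splits an event’s `subject` into container, folder and file name. It is illustrative only: `parse_blob_subject` and `BlobLocation` are hypothetical names, it assumes the usual `/blobServices/default/containers/<container>/blobs/<path>` subject format, and ADF does this parsing for you (the exact shape of its `folderPath` value, e.g. whether it includes the container, may differ).

```python
from dataclasses import dataclass

# Assumed subject format for storage account blob events.
SUBJECT_PREFIX = "/blobServices/default/containers/"


@dataclass(frozen=True)
class BlobLocation:
    container: str
    folder_path: str  # path inside the container, without the file name
    file_name: str


def parse_blob_subject(subject: str) -> BlobLocation:
    """Split a blob event subject into container, folder path and file name."""
    if not subject.startswith(SUBJECT_PREFIX):
        raise ValueError(f"Not a blob event subject: {subject!r}")
    container, sep, blob_path = subject[len(SUBJECT_PREFIX):].partition("/blobs/")
    if not sep or not container or not blob_path:
        raise ValueError(f"Malformed blob event subject: {subject!r}")
    # Everything before the last "/" is the folder; the rest is the file name.
    folder_path, _, file_name = blob_path.rpartition("/")
    return BlobLocation(container, folder_path, file_name)


loc = parse_blob_subject(
    "/blobServices/default/containers/landing/blobs/vendor-a/2024/orders.csv"
)
print(loc)
# BlobLocation(container='landing', folder_path='vendor-a/2024', file_name='orders.csv')
```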

SFTP events are a bit different from regular blob events. You need to filter the blob events raised by your storage account on the data.api property and include only SftpCommit events; otherwise, you would also get an event for the empty file SFTP creates when an upload starts. This way, you get the event only when SFTP finishes the upload and commits the file. Details are available in the articles here and here.
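
In Event Grid terms, that usually means subscribing to `Microsoft.Storage.BlobCreated` and adding an advanced filter on `data.api`, roughly `{"operatorType": "StringIn", "key": "data.api", "values": ["SftpCommit"]}`. The Python sketch below applies the same rule to already-parsed events so you can see which ones would get through. The function name and the sample payloads are illustrative assumptions, trimmed to the fields the filter reads rather than the full event schema.

```python
# Mirrors an Event Grid subscription on BlobCreated with an advanced filter such as:
# {"operatorType": "StringIn", "key": "data.api", "values": ["SftpCommit"]}
BLOB_CREATED = "Microsoft.Storage.BlobCreated"
ALLOWED_APIS = {"SftpCommit"}


def is_committed_sftp_upload(event: dict) -> bool:
    """True only for BlobCreated events raised when SFTP commits a finished file."""
    if event.get("eventType") != BLOB_CREATED:
        return False
    return event.get("data", {}).get("api") in ALLOWED_APIS


subject = "/blobServices/default/containers/landing/blobs/vendor-a/orders.csv"
events = [
    # Illustrative payloads, trimmed to the fields the filter reads.
    # SFTP first creates the (still empty) file...
    {"eventType": BLOB_CREATED, "subject": subject, "data": {"api": "SftpCreate"}},
    # ...and raises SftpCommit only once the upload has completed.
    {"eventType": BLOB_CREATED, "subject": subject, "data": {"api": "SftpCommit"}},
]

to_copy = [e for e in events if is_committed_sftp_upload(e)]
print(len(to_copy))  # prints 1
```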

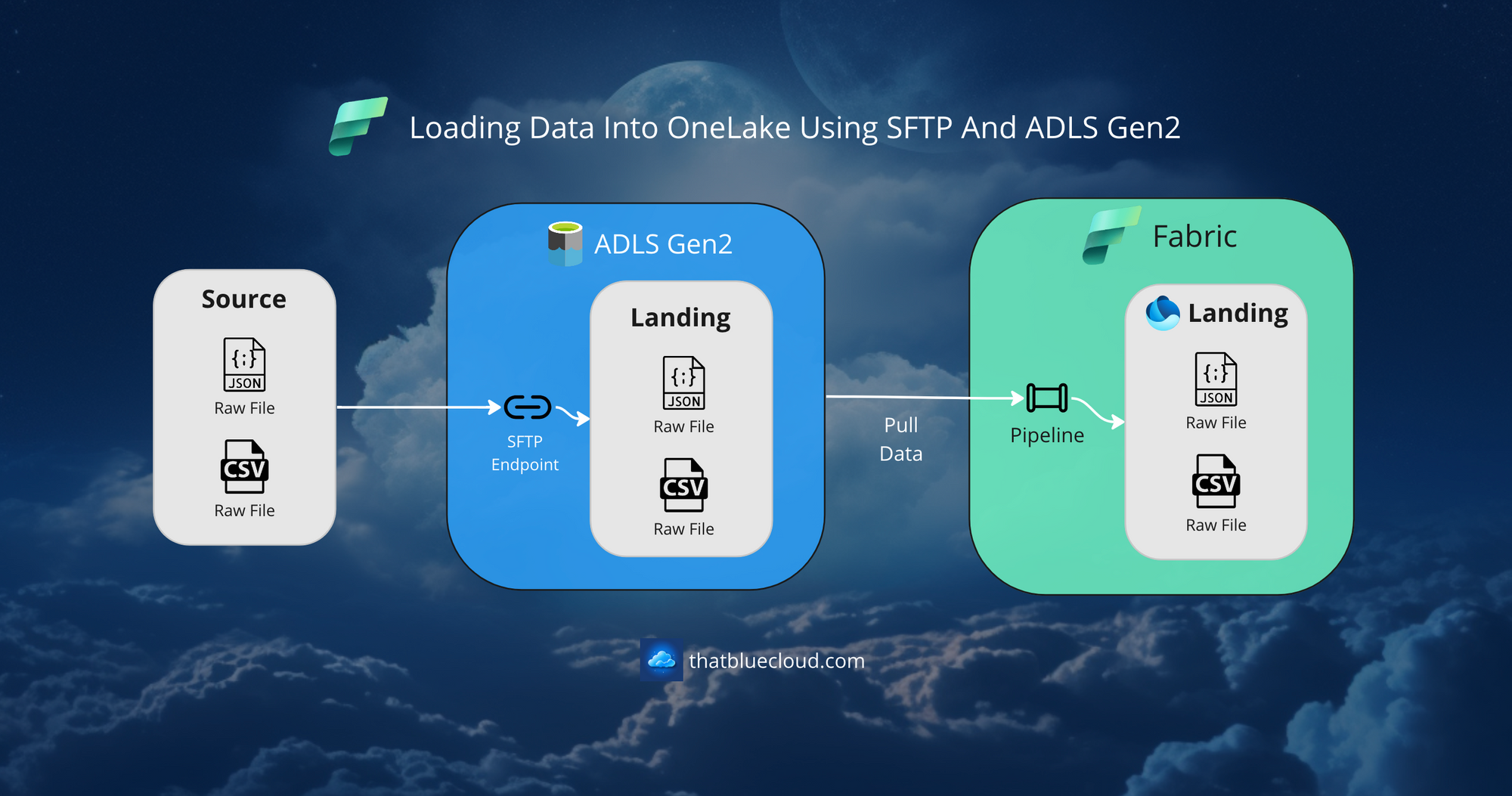

2. Scheduled Transfer Using Fabric Pipelines

Alternatively, you can transfer the data from your Landing account to OneLake on a schedule, for example as a daily job. This is useful if you’d rather process the data in batches than as it arrives, and it generally costs less and needs less monitoring effort. Fabric Pipelines and Dataflows can handle this easily.
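
A scheduled job has to decide which landed files belong to the current batch. One common approach (a general pattern, not something specific to Fabric) is a watermark: each run picks up only the files last modified since the previous run’s cut-off. The sketch below shows that selection logic in Python; `LandedFile` and `files_for_batch` are hypothetical names, and in practice you would more likely use the Copy activity’s filter-by-last-modified options (check that your connector supports them) than hand-rolled code.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LandedFile:
    path: str
    last_modified: datetime


def files_for_batch(files, last_run, this_run):
    """Pick files modified in the half-open window [last_run, this_run).

    Fixing the upper bound at the scheduled run time means a file that lands
    while the batch is running is left for the next run, as long as each run's
    last_run is the previous run's this_run.
    """
    return sorted(
        (f for f in files if last_run <= f.last_modified < this_run),
        key=lambda f: f.last_modified,
    )


utc = timezone.utc
landed = [
    LandedFile("vendor-a/orders.csv", datetime(2024, 1, 1, 6, 0, tzinfo=utc)),
    LandedFile("vendor-b/stock.csv", datetime(2023, 12, 31, 23, 0, tzinfo=utc)),
    LandedFile("vendor-a/returns.csv", datetime(2024, 1, 1, 2, 30, tzinfo=utc)),
    LandedFile("vendor-c/prices.csv", datetime(2024, 1, 2, 0, 0, tzinfo=utc)),
]
batch = files_for_batch(
    landed,
    last_run=datetime(2024, 1, 1, tzinfo=utc),
    this_run=datetime(2024, 1, 2, tzinfo=utc),
)
print([f.path for f in batch])  # ['vendor-a/returns.csv', 'vendor-a/orders.csv']
```

The half-open window is the main design choice here: a file stamped exactly at the cut-off belongs to the next run, so no file is counted in two consecutive batches.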

Sadly, at the time of writing, Fabric Pipelines don’t support event triggers, so you can’t trigger your pipelines using blob events.

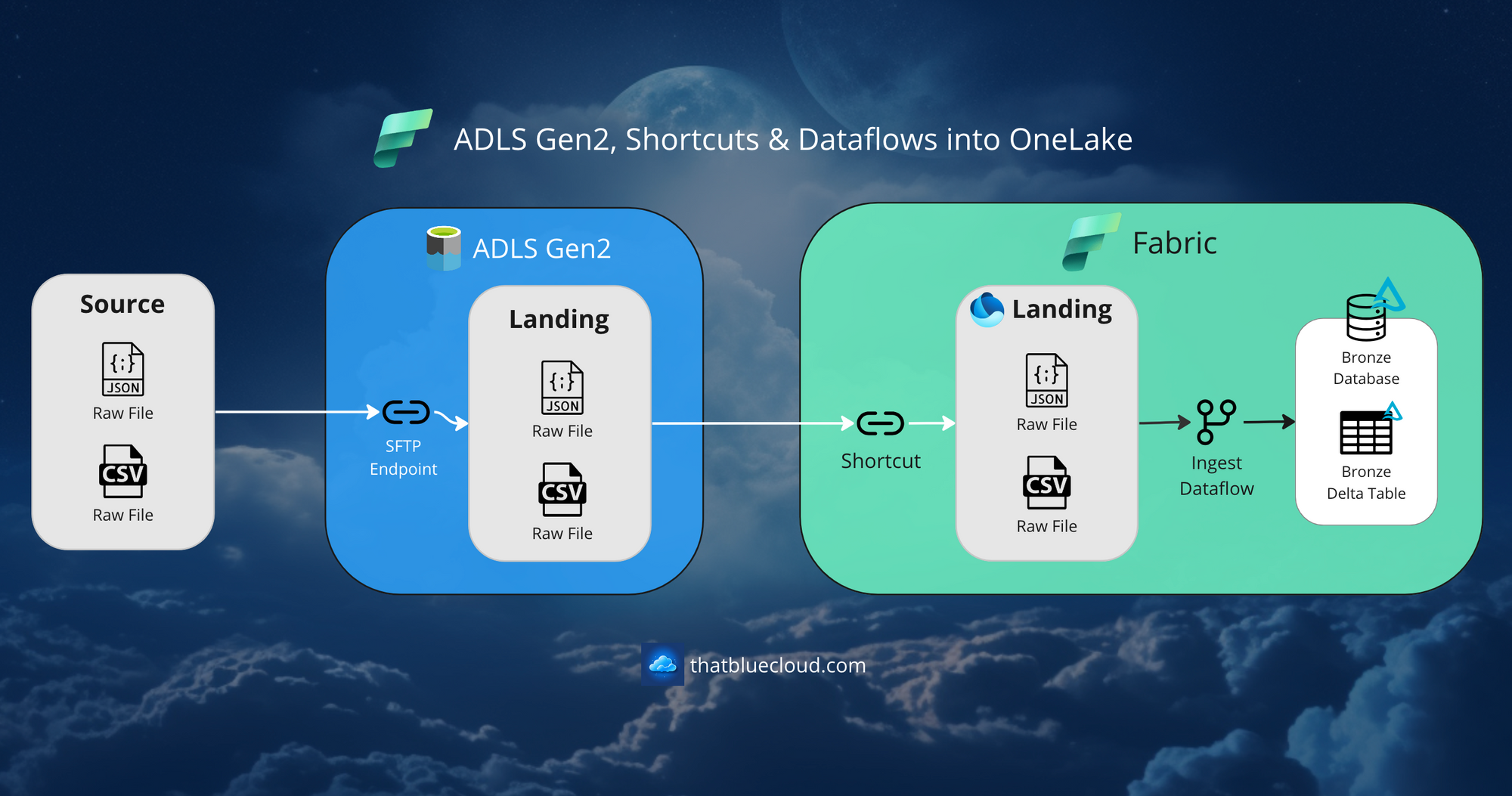

Bonus: Use Dataflows to Transform Directly into Bronze

If you don’t need to keep copies of the files in their original format (via a binary copy into OneLake), you can use Shortcuts to link the Landing account to OneLake. That way, you can use Dataflows to transform the data on the fly and write it directly into your Bronze layer in Delta format.
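
Shortcuts are usually created from the Lakehouse UI, but they can also be created through the Fabric REST API. The sketch below only builds the request for an ADLS Gen2 shortcut and does not send it. The endpoint and body shape reflect my understanding of the OneLake “Create Shortcut” API and should be checked against the current documentation; `build_adls_shortcut_request` is a hypothetical helper, and every ID, account and folder name is a placeholder. It also assumes you already have a Fabric connection to the Landing account (`connection_id`) and a way to obtain an Entra ID bearer token for the actual POST.

```python
import json

# Assumed base URL for the Fabric REST API.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_adls_shortcut_request(workspace_id, lakehouse_id, storage_account,
                                container, folder, connection_id,
                                shortcut_name, lakehouse_path="Files"):
    """Return (url, body) for a OneLake shortcut that points at an ADLS Gen2 folder.

    Only builds the request; sending it with a bearer token is left out.
    """
    url = f"{FABRIC_API}/workspaces/{workspace_id}/items/{lakehouse_id}/shortcuts"
    # subpath is "/<container>" or "/<container>/<folder>", without a trailing slash.
    subpath = "/" + "/".join(p for p in (container, folder.strip("/")) if p)
    body = {
        "path": lakehouse_path,  # where the shortcut appears in the Lakehouse
        "name": shortcut_name,
        "target": {
            "adlsGen2": {
                "location": f"https://{storage_account}.dfs.core.windows.net",
                "subpath": subpath,
                "connectionId": connection_id,
            }
        },
    }
    return url, body


# Placeholder IDs and names, for illustration only.
url, body = build_adls_shortcut_request(
    workspace_id="<workspace-id>",
    lakehouse_id="<lakehouse-id>",
    storage_account="mylandingaccount",
    container="landing",
    folder="vendor-a/",
    connection_id="<connection-id>",
    shortcut_name="vendor_a_landing",
)
print(url)
print(json.dumps(body, indent=2))
```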

Alternatively, if you don’t fancy Shortcuts, you can connect to the Landing account directly using the ADLS Gen2 connector in Dataflows. That achieves the same goal.

Keep in mind that this approach only works for scheduled jobs, because you can’t trigger Fabric Pipelines or Dataflows using events.

Conclusion

There are many flavours of data ingestion into Fabric, but SFTP is a frequent use case if you work with third-party integrations. It’s secure and versatile, and with PGP encryption added on top, it’s hard to beat.

How do you plan to handle SFTP requirements in your Fabric tenant? Talk to me in the comments!