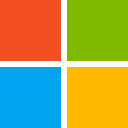

1. Zero Copy Table Clones

I’m really excited about this feature. Imagine creating a clone of an existing table without any data actually being copied. I’m eager to dive into it in detail, but apparently this is how it works: the table’s metadata is cloned, and the underlying Parquet files are forked as of the time of the request. You can read and modify the data in the cloned table without affecting the source table, and vice versa. Creating the clone doesn’t consume extra storage for the data, unlike a traditional copy made with a SELECT INTO statement.
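To make the "metadata is cloned, files are shared" idea concrete, here is a conceptual Python model of a metadata-only (zero-copy) clone. It is a sketch under stated assumptions, not Fabric’s actual implementation. The `Table`, `DataFile` and `clone_table` names are hypothetical. A table is only a list of references to immutable files. Cloning copies that list, and any later write creates new files, so neither table sees the other’s changes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Row = Tuple[str, int]


@dataclass(frozen=True)
class DataFile:
    """Hypothetical immutable data file (a stand-in for a Parquet file)."""
    path: str
    rows: Tuple[Row, ...]


@dataclass
class Table:
    """Hypothetical table: just metadata, i.e. a name plus file references."""
    name: str
    files: List[DataFile] = field(default_factory=list)

    def read(self) -> List[Row]:
        # Reading scans every referenced file in order.
        return [row for f in self.files for row in f.rows]

    def append(self, store: Dict[str, DataFile], rows: Tuple[Row, ...]) -> None:
        # Writes never touch existing files: a new file is added to the
        # physical store and referenced from this table's metadata only.
        new_file = DataFile(f"{self.name}/part-{len(store)}.parquet", rows)
        store[new_file.path] = new_file
        self.files.append(new_file)

    def delete_where(self, store: Dict[str, DataFile],
                     predicate: Callable[[Row], bool]) -> None:
        # Files containing deleted rows are rewritten into new files;
        # untouched files stay shared with any clones.
        new_files: List[DataFile] = []
        for f in self.files:
            kept = tuple(r for r in f.rows if not predicate(r))
            if len(kept) == len(f.rows):
                new_files.append(f)
            else:
                rewritten = DataFile(f"{self.name}/part-{len(store)}.parquet", kept)
                store[rewritten.path] = rewritten
                new_files.append(rewritten)
        self.files = new_files


def clone_table(source: Table, new_name: str) -> Table:
    # Zero-copy clone: copy the list of file references (metadata only),
    # not the files themselves, so no new files are written.
    return Table(name=new_name, files=list(source.files))
```

In this model, `store` plays the role of physical storage. Cloning leaves its size unchanged. Appending to the clone or deleting from the source only adds new files, so each table keeps its own view of the data.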

You can find the announcement here, and details on the relevant Microsoft Learn page.

2. Automatic Update for Statistics

In database and warehouse technologies, statistics are a key input to query optimisation. They are computed from your data (for example, row counts and the distribution of values in a column), and they help the engine estimate how many rows each step will produce so it can pick the cheapest path among the candidate execution plans. If the statistics are inaccurate or stale, the engine may choose a poor plan, and your query might not run as efficiently as you would expect.

Fabric’s SQL engine (both the SQL endpoint and the Warehouse) now supports automatic updates of statistics. Details about the announcement are here, and you can also check the relevant Microsoft Learn page for more technical information.
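To see why stale statistics can hurt, here is a deliberately simplified Python sketch of a cost-based decision. Everything in it is a hypothetical illustration, not how Fabric’s optimiser or its automatic update logic actually works. This includes the uniform-distribution estimate, the join-choice threshold and the 20% staleness rule.

```python
from dataclasses import dataclass


@dataclass
class TableStats:
    """Hypothetical statistics captured at some point in time."""
    row_count: int
    distinct_values: int  # distinct values in the filtered column


def estimate_rows_for_equality(stats: TableStats) -> float:
    # Uniformity assumption: each distinct value appears equally often,
    # so "WHERE col = x" is estimated to return row_count / distinct rows.
    return stats.row_count / max(stats.distinct_values, 1)


def choose_join(estimated_rows: float, threshold: float = 1_000) -> str:
    # Toy rule: small inputs favour a nested loop join, large ones a hash join.
    return "nested loop" if estimated_rows < threshold else "hash join"


def is_stale(stats: TableStats, current_row_count: int, ratio: float = 0.2) -> bool:
    # Hypothetical auto-update trigger: refresh once the row count has
    # drifted by more than `ratio` since the statistics were computed.
    return abs(current_row_count - stats.row_count) > ratio * stats.row_count
```

With statistics captured at 10,000 rows (100 distinct values), the estimate is 100 rows and the toy planner picks a nested loop. If the table has since grown to 5,000,000 rows, the real result is closer to 50,000 rows, where a hash join would be the better choice. An automatic refresh triggered by `is_stale` would let the planner notice that.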

3. Share a Lakehouse Without Access to the Workspace

You can now grant people access to your Lakehouse without giving them access to your workspace. This allows those users to see your Lakehouse and query its data, without seeing anything else in the workspace (unless they’ve been granted explicit permission to those items as well). Apparently, sharing also grants access to the shared Lakehouse’s SQL endpoint and default dataset.

This can come in quite handy in many multi-team scenarios, and it should reduce clutter in the hub. It also reduces security risk and gives you more control over what’s shared and what isn’t.

Here’s the link for the announcement.

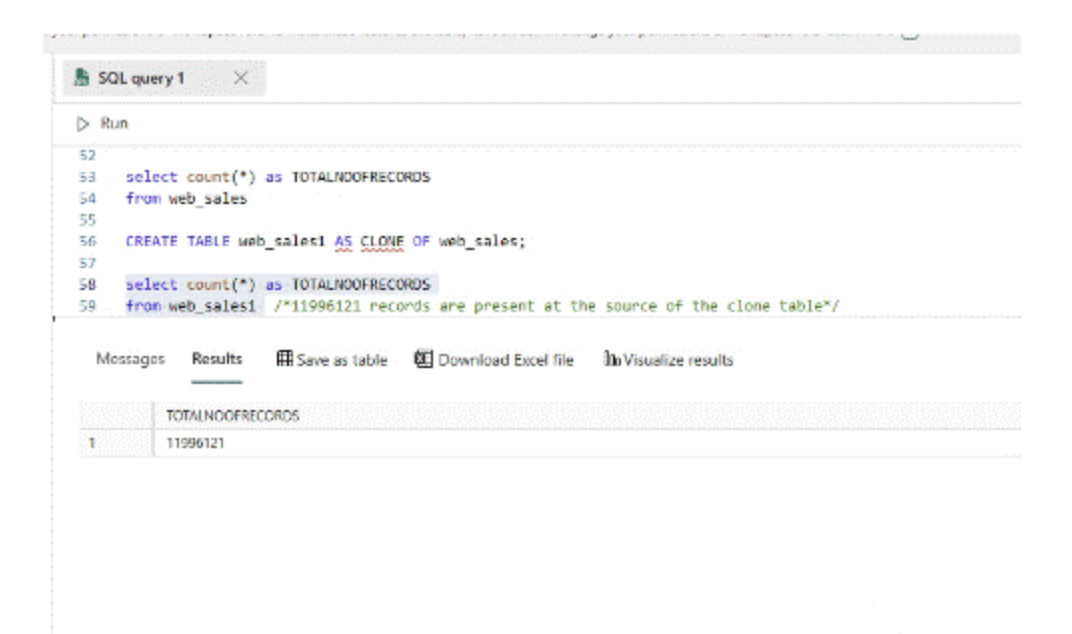

4. Continuous Connection from Event Hubs to KQL Databases

If you’re utilising Event Hubs for streaming data or event ingestion, there’s good news: you can now connect it directly to your Fabric tenant and your KQL databases for real-time analytics. This should make it much easier to get more out of Event Hubs in Fabric.

There’s a small prerequisite for achieving this, though:

> A prerequisite for creating a cloud connection in Microsoft Fabric is to set a shared access policy (SAS) on the event hub and collect information to be used later in setting up the cloud connection.
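The information to collect typically comes from the shared access policy’s connection string in the Azure portal. It usually includes the namespace, the event hub name, and the policy name and key. The small Python helper below splits a standard Event Hubs connection string into those pieces. It is hypothetical and not part of any Fabric or Azure SDK. It assumes the connection string was copied from a policy defined on the event hub itself, so it includes `EntityPath`.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class EventHubConnectionInfo:
    """Hypothetical container for the values a cloud connection asks for."""
    namespace_host: str  # e.g. "<namespace>.servicebus.windows.net"
    event_hub: str       # from EntityPath
    policy_name: str     # from SharedAccessKeyName
    policy_key: str      # from SharedAccessKey


REQUIRED = ("Endpoint", "SharedAccessKeyName", "SharedAccessKey", "EntityPath")


def parse_connection_string(conn_str: str) -> EventHubConnectionInfo:
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        # Split on the first '=' only: base64 keys often end with '='.
        key, _, value = segment.partition("=")
        parts[key.strip()] = value.strip()

    missing = [k for k in REQUIRED if k not in parts]
    if missing:
        raise ValueError(f"connection string is missing: {', '.join(missing)}")

    return EventHubConnectionInfo(
        # Endpoint looks like "sb://<namespace>.servicebus.windows.net/".
        namespace_host=urlparse(parts["Endpoint"]).hostname or "",
        event_hub=parts["EntityPath"],
        policy_name=parts["SharedAccessKeyName"],
        policy_key=parts["SharedAccessKey"],
    )
```

If a connection string copied from a namespace-level policy lacks `EntityPath`, the helper raises a `ValueError` rather than guessing the event hub name.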

Here’s the link for further details.

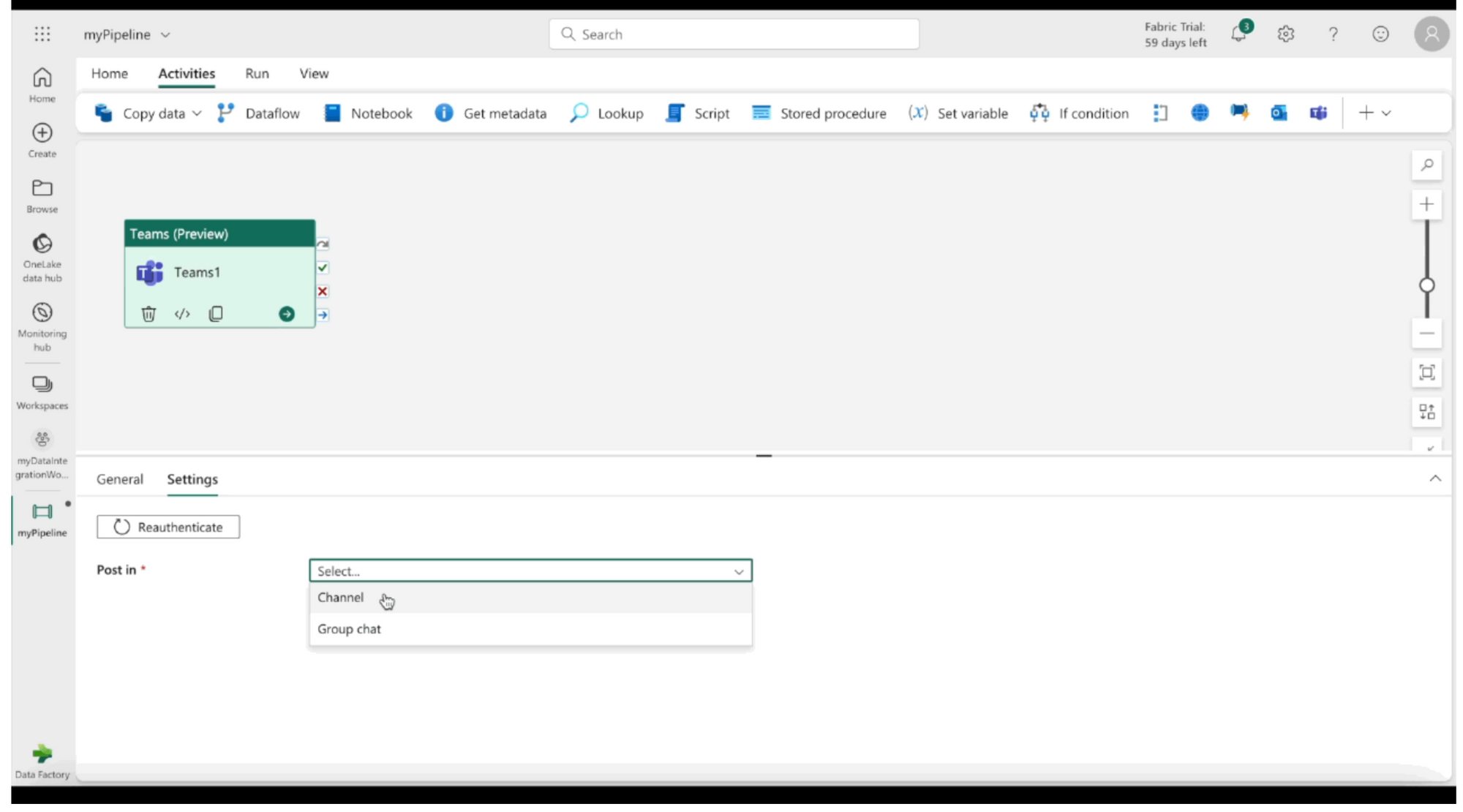

5. Send Message to Teams in Your Data Pipelines

If you’ve wanted to send a message to a Teams channel after your pipeline runs, or when something goes wrong, this one is for you. You can now do exactly that with the new Teams activity in your pipeline.

More details are here.

Conclusion

The full list of changes, with over 60 new features and updates, is below. Don’t forget to check it out!